Kyverno and Kubecost: Real-time Kubernetes Cost Management

You’ve probably heard “policy for Kubernetes” a few times at this point and may take that to mean a way to improve security. While that’s definitely true, policy in Kubernetes covers far more than just security. In this post, I’ll show how Kubernetes policy-as-code driven by Kyverno, paired with Kubecost, can not only provide more cost visibility but actually help prevent cost overruns before they ever occur. How’d you like to be able to show this to users creating expensive Deployments?

This Deployment, which costs $78.68 to run for a month, will overrun the remaining budget of $45.43. Please seek approval or request a Policy Exception.

Why Policy in Kubernetes

At its most basic, policy is simply a way to establish a set of rules, a contract, between systems and people. That contract can be written many ways and with many goals. The quintessential goal of most policy in Kubernetes is security, that’s true, but outside of security, policy can do things like enforce your own governance standards, apply default values to resources, and even deliver multi-tenancy! The term policy-as-code means that these types of policies are written as code, typically standard YAML in the case of Kubernetes, and often stored in a Version Control System (VCS) such as Git.

Policy is all around us in Kubernetes, and chances are you’re using some form of it today. There are built-in resources which implement policy such as NetworkPolicies, ResourceQuotas, and LimitRanges, to name a few. When it comes to policy specifically for Pods and, broadly, their security concerns, we have Pod Security Admission and, previously, PodSecurityPolicy. These are all built-in options. But there exists another class of policy, known as dynamic admission control, which Kubernetes delegates to external systems. This is where the real power lies and where we’ll focus in this blog.

Cost Drivers in Kubernetes

When it comes to cost drivers in Kubernetes, the specific things which cost you money, we’re typically talking about two large classifications. First, there are the workloads running in the cluster itself. These are Pods, the atomic unit of scheduling, and the containers they run. Pods are almost always created by higher-level controllers such as Deployments, StatefulSets, Jobs, and others. Pods consume resources, and those resources must be provided by the nodes which comprise the cluster.

The second class is resources created outside of your cluster in service of the workloads running inside it. Examples include a load balancer which directs your customers to those workloads, or a storage bucket in your public cloud provider which contains files needed by your in-cluster workloads. These can be shared or dedicated resources. No matter what the driver, they cost you money to deploy and run and, if not checked and managed carefully, can very quickly end up costing you way more than you bargained for. It’s critical to be able to see and understand how these drivers interrelate because, without this, you cannot make smart decisions about where best to spend your money.

Reactive vs Proactive Cost Controls

Now that you understand the drivers, you should understand the options available to you for their control. Reactive cost control is about giving you the information, the data, about where your money is or was being spent. The idea is that, if it is granular enough, this information will help you make smarter decisions in the future and, hopefully, save you money. Reactive cost control usually comes in the form of monitoring, alerting, reporting, and maybe even recommendations.

Proactive cost control, on the other hand, is about actively preventing that spend before it occurs, by stopping it at the source. In order to make these important decisions, proactive cost control may rely on a form of reactive cost control to ensure an accurate decision and enforcement can be made. Combining both is essential for a successful FinOps strategy.

Introducing Kyverno

Kyverno is a policy engine for Kubernetes which allows you to write your own custom policies using standard YAML as opposed to a programming language. Kyverno is a CNCF incubating project and in recent years has risen to become a favorite policy engine amongst Kubernetes users. In addition to its model of simplicity, it is also the most powerful and capable policy engine for Kubernetes with its abilities to validate, mutate, generate, and clean up resources in the cluster as well as verify and maintain software supply chain security. Kyverno also recently added the ability to validate any JSON for use cases outside of Kubernetes. Other similar, but less capable, policy engines also exist in the CNCF including OPA Gatekeeper and Kubewarden. For a comparison between Kyverno and Gatekeeper, see my popular blog post here.

Introducing Kubecost

Although Kubecost probably needs no introduction, to recap: Kubecost is the leading cost visibility tool for Kubernetes, allowing you to monitor and reduce your Kubernetes spend through its powerful introspection abilities. Kubecost deeply understands which resources are being consumed and where, and provides you with insights such as dashboards, reports, alerts, recommendations, governance, and even automation, in real time, to help you reduce and control that spend. In addition to granular Kubernetes costing, Kubecost also provides cost visibility for your cloud resources, helping to tie the two together in a comprehensive picture.

Use Cases

Let’s look at some concrete use cases where this combination of policy through Kyverno and Kubecost can help deliver both reactive and proactive cost controls. We’ll do so by looking at how we can gain visibility into and ultimately manage spend for the two cost drivers described above.

Labels for Visibility

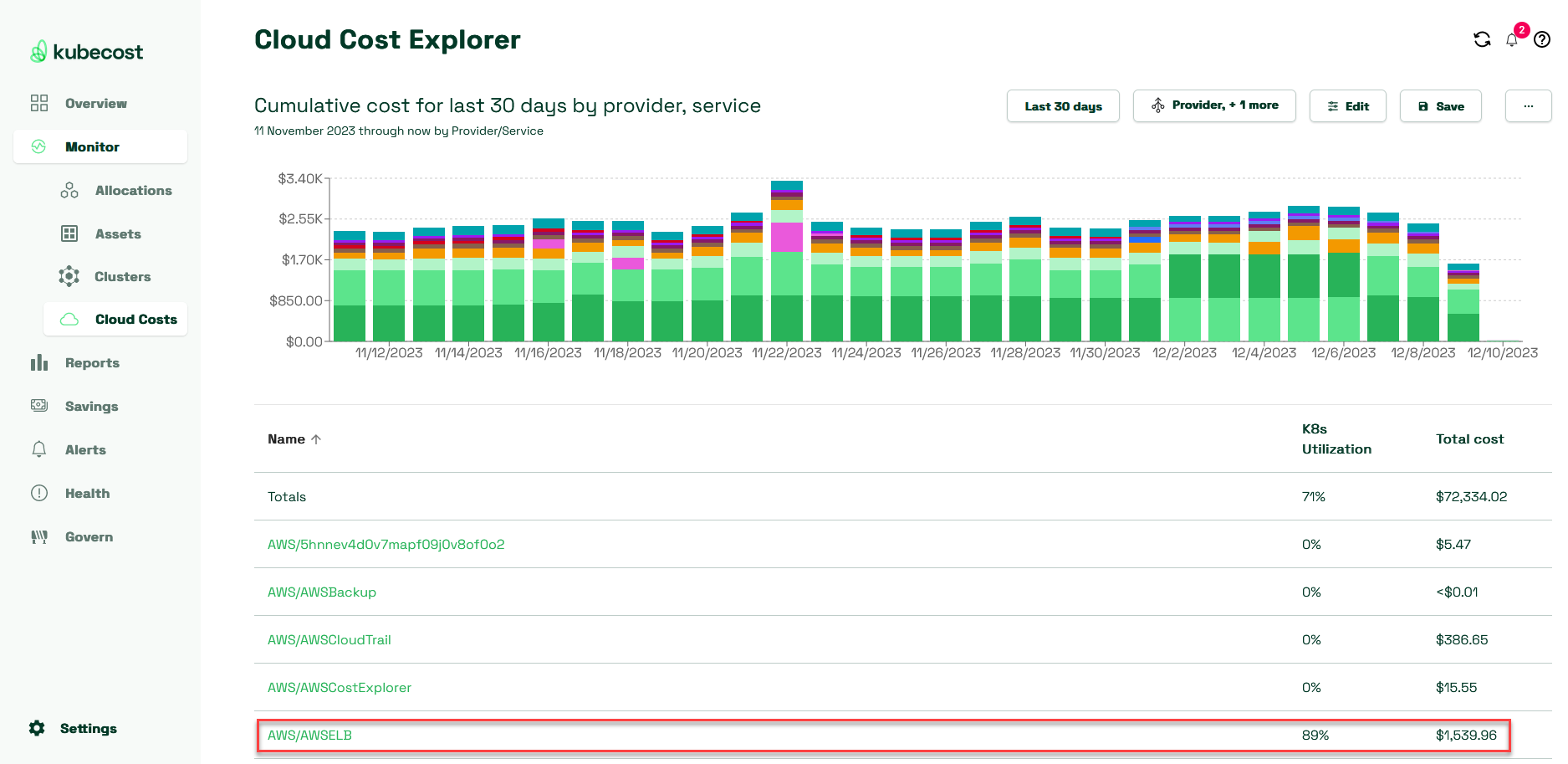

For the first use case, let us consider how we can ensure that Kubecost is able to accurately provide you with the current cost figures.

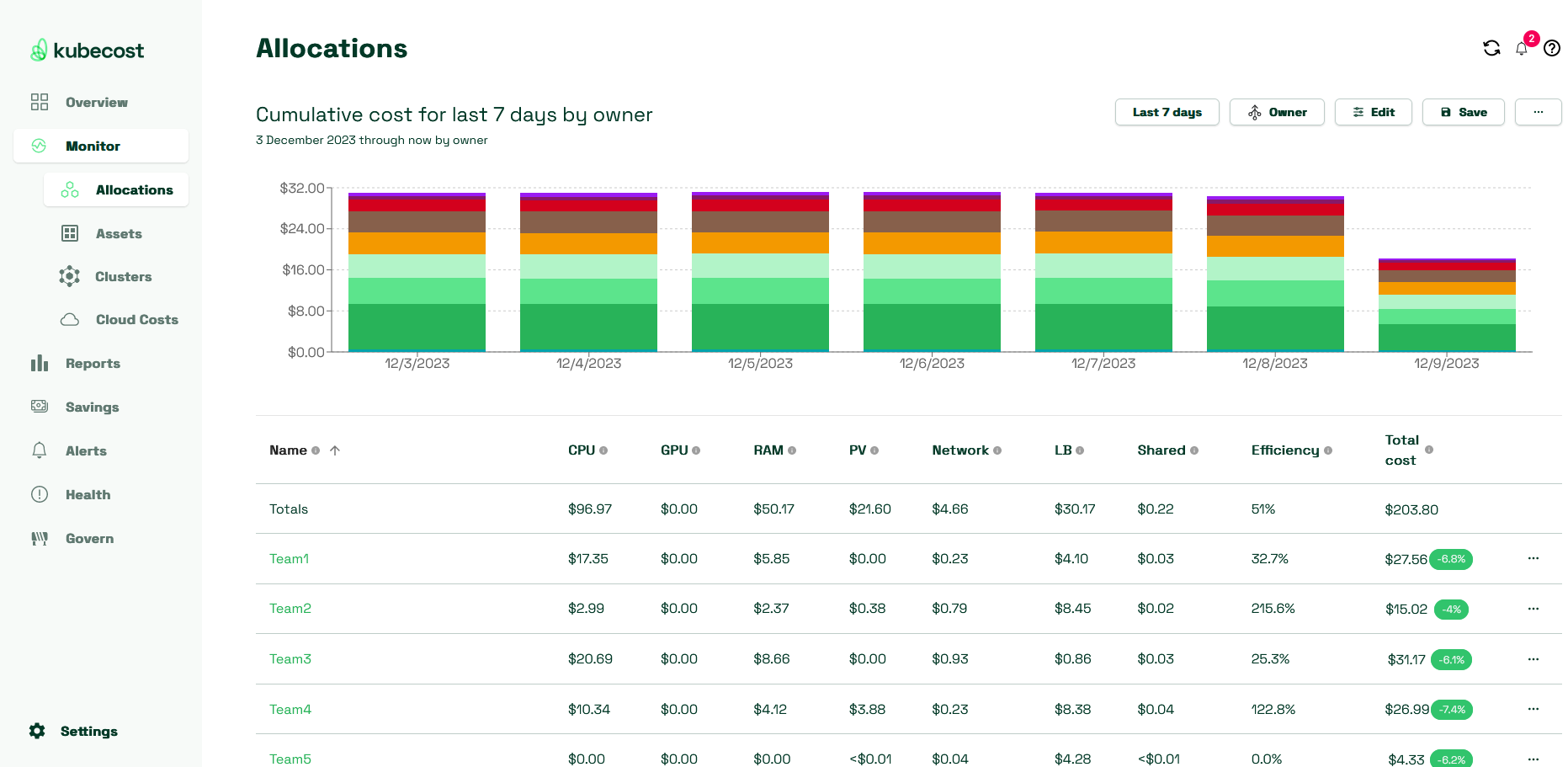

Kubernetes is fairly vast and there are several strategies which can be used to group workloads. At the highest level, we have clusters themselves, where sometimes separate products even receive their own cluster. At the lowest level, we have metadata such as labels. Clusters more commonly serve multiple types of workloads, so Kubernetes labels are very often the organizational method of choice to attribute cost centers. Labels with names such as “team” and “owner” are used on workloads so that Kubecost may scrape and present to you the costs associated with them. For example, you may want to see how much the engineering team spent in the last six months running their workloads, or how much Jenny Smith is spending on the workloads she owns. In order to ensure Kubecost can accurately answer those questions, we have to ensure these labels are present to begin with. If they are not, it’s not that the workload isn’t costing you money (it most definitely is); it’s that the workload is invisible in those views, which can be a real problem. We can address this with a simple policy to ensure that all Pods introduced in the cluster have these labels with some value.
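To see why an unlabelled workload becomes invisible in a label-based view, here is a minimal Python sketch of grouping costs by a label. It is not how Kubecost is implemented; the workload records, the `monthly_cost` field, and the `__unlabelled__` bucket name are all made up for illustration.

```python
from collections import defaultdict

UNLABELLED = "__unlabelled__"  # hypothetical bucket name used only in this sketch


def aggregate_by_label(workloads, label):
    """Sum monthly cost per value of `label`; workloads without it share one bucket."""
    totals = defaultdict(float)
    for workload in workloads:
        value = workload.get("labels", {}).get(label) or UNLABELLED
        totals[value] += workload["monthly_cost"]
    return dict(totals)


# Illustrative data only: names and costs are invented.
workloads = [
    {"name": "api", "labels": {"team": "engineering"}, "monthly_cost": 40.0},
    {"name": "worker", "labels": {"team": "engineering"}, "monthly_cost": 25.5},
    {"name": "site", "labels": {"team": "marketing"}, "monthly_cost": 12.0},
    {"name": "mystery", "labels": {}, "monthly_cost": 30.0},
]

costs_by_team = aggregate_by_label(workloads, "team")
print(costs_by_team)
```

The workload with no `team` label still contributes cost, but it cannot be attributed to anyone, which is the gap a label policy is meant to close.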

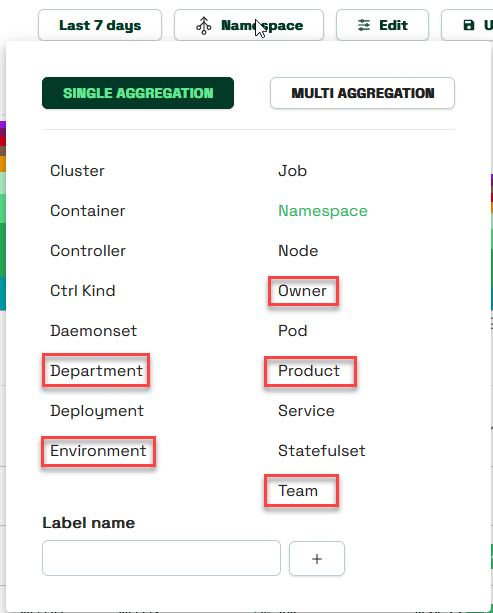

Kubecost offers several default aggregations–views–by which you can organize your costs. Those pointed out below map onto specific labels.

Of course, you’re welcome to select your own labels as well as customize the mappings for these default ones.

Now let’s see how policy can help ensure these labels are present so our allocated costs are always accurate and nothing is allowed to fly under the radar. This is where we’ll pull in Kyverno.

To start, get Kyverno installed. Like Kubecost, it’s quick and easy to install with just a single helm command.

helm install kyverno --repo https://kyverno.github.io/kyverno/ kyverno -n kyverno --create-namespace

With Kyverno installed, let’s introduce a Kyverno policy which can ensure that the owner and environment labels are present. We’ll stick to the Kubecost defaults which map these as owner and env, respectively, although, once again, you can add, remove, and change these to your delight.

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-kubecost-labels
spec:
  validationFailureAction: Audit
  background: true
  rules:
  - name: require-labels
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "The Kubecost labels `owner` and `env` are all required for Pods."
      pattern:
        metadata:
          labels:
            owner: "?*"
            env: "?*"

Even if you’ve never used Kyverno before, its simple style of writing policy means just about anyone can read and write a Kyverno policy and instantly be on the same page. This can be hugely important in helping all stakeholders come together and understand these contracts. This policy simply says, as the message already indicates, that the owner and env labels are required for Pods. A couple of other fields are worth pointing out. The first is validationFailureAction, which tells Kyverno how to behave when a Pod violates the policy. By setting it to Audit, we tell Kyverno to let the Pod pass but to record the violation in a report for us to inspect. More on that in just a moment. The other field is background which, when set to true, instructs Kyverno to periodically scan the resources already in the cluster and report on how they compare to the policy.
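As a rough illustration of what the `"?*"` pattern asks for, the check can be sketched in Python. This is not Kyverno's implementation, and the function name and sample manifests are hypothetical; it only mirrors the idea that `?` matches exactly one character and `*` matches zero or more, so each label needs a value of at least one character.

```python
def missing_required_labels(pod, required=("owner", "env")):
    """Return the required label keys that are absent or empty on a Pod manifest."""
    labels = (pod.get("metadata") or {}).get("labels") or {}
    missing = []
    for key in required:
        value = labels.get(key)
        # "?*" requires a string with at least one character.
        if not isinstance(value, str) or value == "":
            missing.append(key)
    return missing


# Hypothetical manifests, trimmed to the fields the check looks at.
good_pod = {"metadata": {"name": "good", "labels": {"owner": "jenny", "env": "prod"}}}
bad_pod = {"metadata": {"name": "product-alpha", "labels": {"owner": "chip"}}}

print(missing_required_labels(good_pod))
print(missing_required_labels(bad_pod))
```

The second Pod has `owner` but no `env`, so it would be reported in Audit mode and rejected in Enforce mode.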

After creating this policy, assuming we’ve got some workloads in our cluster which are “good” and have both of these labels and some which are “bad” and do not, we can see the results.

First, get the policies back and make sure it’s in a ready state.

$ kubectl get clusterpolicy
NAME                      ADMISSION   BACKGROUND   VALIDATE ACTION   READY   AGE   MESSAGE
require-kubecost-labels   true        true         Audit             True    15m   Ready

One of Kyverno’s hallmark abilities, which helps provide visibility into the operation of policies (and is therefore an important aspect of cost visibility), is its ability to create policy reports. A policy report is a Kubernetes Custom Resource which records the results of applying Kyverno policies to resources such as Pods. By installing the above Kyverno policy, we get policy reports created automatically by Kyverno. The reports contain the results of comparing existing Pods to the Kyverno policy which requires the two labels. These results tell us which of our Pods meet the policy definition (i.e., have the labels) and which do not.

Let’s look at some of these reports now.

Here, we can see all the policy reports Kyverno created in the engineering Namespace as a result of creating that policy. We can see from the output that there are reports for Pods but also for their higher-level controllers such as ReplicaSets and Deployments. Why is that? Another helpful Kyverno ability is rule auto-generation, which allows policies written for just Pods to be automatically translated to their controllers such as Deployments, StatefulSets, Jobs, etc.

$ kubectl -n engineering get policyreport -o wide
NAME          KIND         NAME                            PASS   FAIL   WARN   ERROR   SKIP   AGE
c61f9f68...   Deployment   alwyn-eng                       0      1      0      0       0      25s
267332e2...   Deployment   falcon-eng                      0      1      0      0       0      25s
fb0325d5...   Deployment   prescott-eng                    1      0      0      0       0      25s
2243f112...   Pod          falcon-eng-74f887d48d-nnbwx     0      1      0      0       0      25s
420aeba6...   Pod          falcon-eng-74f887d48d-vmf9v     0      1      0      0       0      25s
44244e0f...   Pod          alwyn-eng-56cc6569dd-6thl6      0      1      0      0       0      25s
567bf814...   Pod          alwyn-eng-56cc6569dd-zcsrt      0      1      0      0       0      25s
b18b11db...   Pod          prescott-eng-5744875f78-bmb6k   1      0      0      0       0      25s
3529b977...   Pod          prescott-eng-5744875f78-6lwzn   1      0      0      0       0      25s
1bf28982...   Pod          prescott-eng-5744875f78-gkpk6   1      0      0      0       0      25s
54e06344...   ReplicaSet   alwyn-eng-56cc6569dd            0      1      0      0       0      24s
b9751a7a...   ReplicaSet   prescott-eng-5744875f78         1      0      0      0       0      24s
478b73a4...   ReplicaSet   falcon-eng-74f887d48d           0      1      0      0       0      24s

Let’s inspect one of these reports for an example “good” Deployment called prescott-eng.

kubectl -n engineering get policyreport fb0325d5-b46b-478e-b7b3-4fab656758b8 -o yaml

Below, you can see the full report Kyverno created indicating this passed.

apiVersion: wgpolicyk8s.io/v1alpha2
kind: PolicyReport
metadata:
  creationTimestamp: "2023-12-09T20:40:01Z"
  generation: 2
  labels:
    app.kubernetes.io/managed-by: kyverno
  name: fb0325d5-b46b-478e-b7b3-4fab656758b8
  namespace: engineering
  ownerReferences:
  - apiVersion: apps/v1
    kind: Deployment
    name: prescott-eng
    uid: fb0325d5-b46b-478e-b7b3-4fab656758b8
  resourceVersion: "314764"
  uid: 987d6af0-1fcc-4cff-9e6f-84c99bec8668
results:
- message: validation rule 'autogen-require-labels' passed.
  policy: require-kubecost-labels
  result: pass
  rule: autogen-require-labels
  scored: true
  source: kyverno
  timestamp:
    nanos: 0
    seconds: 1702154421
scope:
  apiVersion: apps/v1
  kind: Deployment
  name: prescott-eng
  namespace: engineering
  uid: fb0325d5-b46b-478e-b7b3-4fab656758b8
summary:
  error: 0
  fail: 0
  pass: 1
  skip: 0
  warn: 0

Similar reports with failing results exist for some other Deployments without all the required labels.
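If you export reports like these (for example as JSON) and want a quick list of offenders, a small script can walk the `scope` and `results` fields. The sketch below is only illustrative: the two report dictionaries are hand-written and trimmed to the fields used in the report shown above, and the failing entry for alwyn-eng is an assumption about what its report would contain.

```python
def failing_resources(reports):
    """Return (kind, name, rule) for every failed result across policy reports."""
    failing = []
    for report in reports:
        scope = report["scope"]
        for result in report.get("results", []):
            if result["result"] == "fail":
                failing.append((scope["kind"], scope["name"], result["rule"]))
    return failing


# Hand-written, trimmed examples; not actual cluster output.
reports = [
    {
        "scope": {"kind": "Deployment", "name": "prescott-eng"},
        "results": [{"rule": "autogen-require-labels", "result": "pass"}],
    },
    {
        "scope": {"kind": "Deployment", "name": "alwyn-eng"},
        "results": [{"rule": "autogen-require-labels", "result": "fail"}],
    },
]

offenders = failing_resources(reports)
print(offenders)
```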

Policy reports such as these help us with reactive cost control because they allow the cluster administrator to see all of the current resources in violation. They also enable developer self-service because access to policy reports can be granted using RBAC, allowing each developer to see how their own individual resources are doing without causing disruption. This is an excellent first step, but let’s now move this to the proactive stage and help prevent these invisible costs before they start.

Modify the policy to change the value of the validationFailureAction field from Audit to Enforce and apply it now. Check policy status once again and ensure it is in Enforce mode.

$ kubectl get clusterpolicy
NAME                      ADMISSION   BACKGROUND   VALIDATE ACTION   READY   AGE   MESSAGE
require-kubecost-labels   true        true         Enforce           True    17m   Ready

With the policy now in Enforce mode, should future Pods or their ilk be in violation they will be denied. This is simple to test with the below command in which we’ll define owner but not env. According to the policy, this should be disallowed.

kubectl run product-alpha --image busybox:latest -l owner=chip

After attempting to run this command, you should find that Kyverno has prevented the attempt because the env label was not present.

Error from server: admission webhook "validate.kyverno.svc-fail" denied the request:

resource Pod/default/product-alpha was blocked due to the following policies

require-kubecost-labels:
  require-labels: 'validation error: The Kubecost labels `owner` and `env` are all
    required for Pods. rule require-labels failed at path /metadata/labels/env/'

So, as we can see here, Kyverno can first provide visibility into which resources may not show up in your aggregated view of choice, and can later ensure that everything does show up by not allowing unlabelled resources into the cluster in the first place. If you are interested in a graphical dashboard which offers a more convenient and user-friendly display of these policy reports, take a look at the open source Policy Reporter from the Kyverno project.

Let’s now take a look at how we can extend some of this behavior to cloud costs.

Controlling Cloud Costs

Kubernetes clusters very commonly need adjacent infrastructure such as networking and storage to complete a full application stack. When running in cloud providers such as AWS, Azure, and GCP, there are special integrations which allow Kubernetes to request these resources from the various services offered by those providers. For example, if you’re running in Amazon EKS and require external traffic to enter the cluster and be serviced by Pods, you will likely need a Kubernetes Ingress resource or a Service resource of type LoadBalancer. Provisioning this Ingress resource in the cluster will typically result in the provisioning of an AWS Application Load Balancer (ALB) in your AWS account; provisioning a Service of type LoadBalancer will typically result in a Network Load Balancer. As you read earlier in this blog, these cloud resources are one of the cost drivers, and so they too must be controlled.

And, by the way, the problem can be even more complex when you start introducing custom controllers such as Crossplane which can provision just about any type of infrastructure, even fully custom stacks!

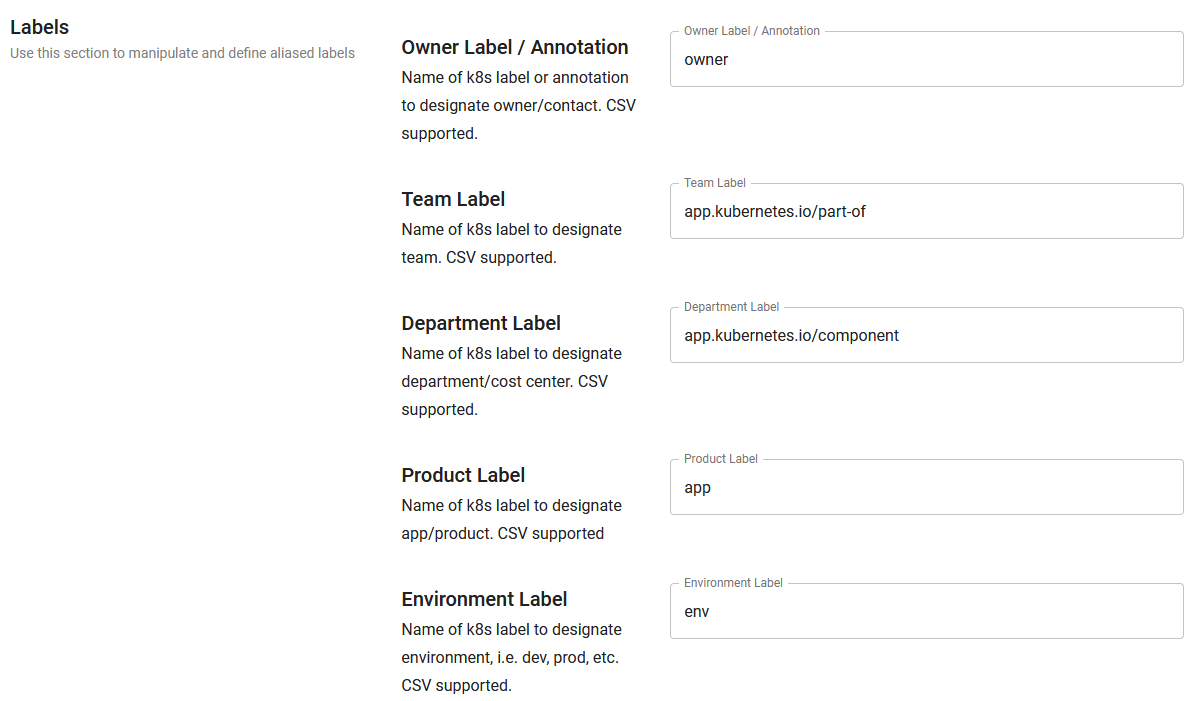

Kubecost natively provides cloud cost visibility but goes a step further by indicating which of these cloud resources can be attributed back to one of your Kubernetes clusters. Shown here is an example of the cloud costs for some AWS services, including Elastic Load Balancers (ELB) and what percentage of them are associated with a Kubernetes cluster.

Presented with this information, you might be able to go and identify where, why, and how these load balancers are in use across your estate, ultimately resulting in some decommissioning (reactive). But this can be taken a step further with policy-as-code through Kyverno by actively limiting the number and type of load balancers created in your cloud account (proactive). For example, some users would like to prohibit the provisioning of Kubernetes Services of type LoadBalancer altogether, while others wish to restrict them to just one per Namespace. Neither is possible with Kubernetes RBAC, so we can look to external policy to help us out. Let’s go with the single-per-Namespace strategy.

Below, you’ll find a Kyverno policy which limits the number of Services specifically of type LoadBalancer to one per Namespace. Notice that this style of policy is a bit more complex than the first as it performs an API call to the Kubernetes API server to get information about the state of the cluster. The request to create a new Service must take existing Services into account, and this is something Kyverno does quite easily. After fetching those existing Services, it simply counts the ones which are of type LoadBalancer and stores the count in a variable. Whether to deny or allow the current request depends on the number in that variable.

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: kubecost-loadbalancer-service
spec:
  validationFailureAction: Enforce
  background: true
  rules:
  - name: single-lb
    match:
      any:
      - resources:
          kinds:
          - Service
          operations:
          - CREATE
    # Only evaluate Services of type LoadBalancer so other Service types are unaffected.
    preconditions:
      all:
      - key: "{{ request.object.spec.type || '' }}"
        operator: Equals
        value: LoadBalancer
    context:
    # Count the existing Services of type LoadBalancer in the request's Namespace.
    - name: lbservices
      apiCall:
        urlPath: /api/v1/namespaces/{{ request.namespace }}/services/
        jmesPath: items[?spec.type=='LoadBalancer'] | length(@)
    validate:
      message: "Only a single Service of type LoadBalancer is permitted per Namespace."
      deny:
        conditions:
        - key: "{{ lbservices }}"
          operator: GreaterThanOrEquals
          value: 1

Just as with the first use case, if we were to set this to Audit mode, we’d get a policy report providing visibility as to which Namespaces might be offenders of this policy. By setting to Enforce, we take steps to proactively prevent the creation of more than a single Service of type LoadBalancer in a given Namespace, helping to prevent cost overrun before it begins.
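The counting logic can be sketched in plain Python to make the decision explicit. This is not Kyverno code; the function names and sample Service list are hypothetical, and the sketch assumes (per the stated intent) that only Services of type LoadBalancer are subject to the limit.

```python
def count_loadbalancers(service_list):
    """Mirror of the JMESPath filter: count items whose spec.type is LoadBalancer."""
    items = service_list.get("items", [])
    return len([s for s in items if s.get("spec", {}).get("type") == "LoadBalancer"])


def admit_service(new_service, existing_services):
    """Allow the new Service unless it would be a second LoadBalancer in the Namespace."""
    if new_service.get("spec", {}).get("type") != "LoadBalancer":
        return True  # assumption: other Service types are never limited
    # Deny when one or more LoadBalancer Services already exist.
    return count_loadbalancers(existing_services) < 1


# Hypothetical state of a Namespace: one LoadBalancer and one ClusterIP Service.
existing = {
    "items": [
        {"metadata": {"name": "web"}, "spec": {"type": "LoadBalancer"}},
        {"metadata": {"name": "db"}, "spec": {"type": "ClusterIP"}},
    ]
}

print(admit_service({"spec": {"type": "LoadBalancer"}}, existing))
print(admit_service({"spec": {"type": "ClusterIP"}}, existing))
print(admit_service({"spec": {"type": "LoadBalancer"}}, {"items": []}))
```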

A user attempting to create a second such Service in a given Namespace would be greeted with the following error.

Error from server: error when creating "service.yaml": admission webhook "validate.kyverno.svc-fail" denied the request:

resource Service/default/my-service was blocked due to the following policies

kubecost-loadbalancer-service:
  single-lb: Only a single Service of type LoadBalancer is permitted per Namespace.

In this use case, we’ve seen how we can leverage policy-as-code to complement the visibility provided by Kubecost to help save on costs up front. In the third and final use case, we’ll level this up even further with predictive budgetary cost controls!

Proactive Budget Control

By this point, you’re fully acquainted with the types of cost drivers and how moving from reactive reporting to proactive enforcement can be a game changer when it comes to realizing savings. The two previous use cases covered visibility and enforcement, but in this last use case we’ll look at how to achieve nirvana by using a combination of some innovative features in Kubecost Enterprise to assess and prevent the majority of your in-cluster spend based upon your governance standards, with the assistance of Kyverno.

Most of the money you spend on a Kubernetes environment is going to be driven by, no great surprise here, the workloads actually running on those clusters. Most forms of savings opportunities will be driven off of that reality. In order to get in front of that, we can prevent these workloads from being admitted based upon the cost they may incur. To assist with this, Kubecost provides two powerful features we will explore: predictions and budgets.

Because Kubecost is collecting granular data on your Kubernetes clusters, it understands what your workloads currently cost. Since it knows what workloads currently cost and how they are configured, it is not too big of a stretch to predict what an incoming, similarly-configured workload will cost. Through the use of Kubecost predictions, this is exactly what Kubecost can tell you. Predictions are available using the kubectl-cost plugin as well as directly via the API. When the predict capability is invoked, a proposed resource such as a Kubernetes Deployment is submitted to Kubecost. Kubecost will then predict what that resource will cost based upon its historic knowledge. This information can be hugely valuable as you’ll soon see.

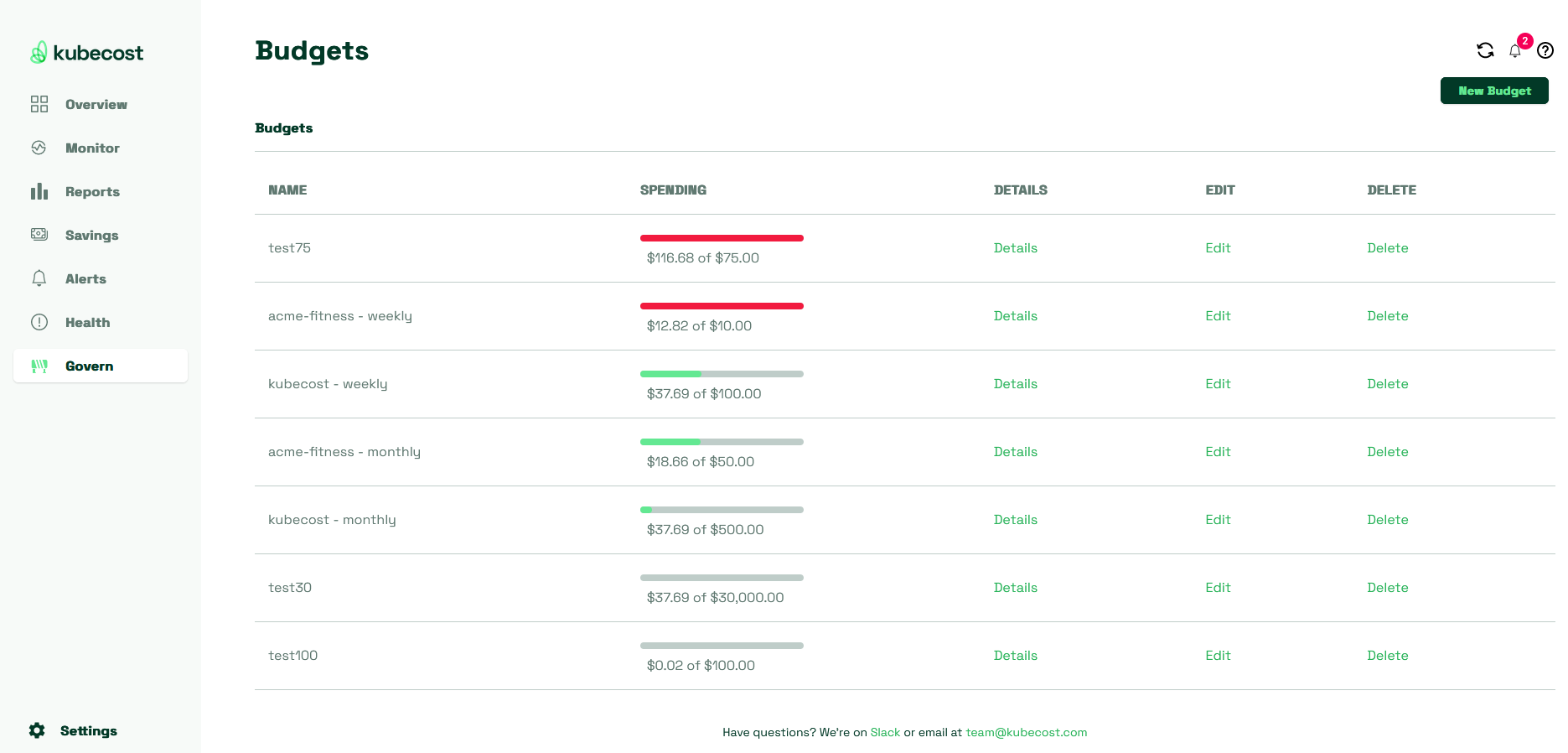

The second capability is Kubecost budgets. A budget allows you to define a spending limit for allocated costs based on some organizational grouping, along with when that limit resets. For example, you can say that the engineering Namespace has a budget of $100 which resets on the first of each month. As time goes on and Kubecost continues to gather data on the workloads in that Namespace, the budget fills up.

Actions can also be associated with budgets allowing you to send an email or webhook if the budget is within a certain defined percentage of its total.

Budgets in Kubecost are extremely powerful not just because of these automated actions, which can help notify when costs are going too high, but because they centrally organize aspects of your cost governance.

The final step here is to leverage both predictions and budgets in order to make an active decision, in the cluster at the time new resources are created, about whether they should be allowed or denied. For example, if the acme-fitness Namespace is already over its allotted budget for the week as shown in the previous screenshot, you probably don’t want to allow creation of new workloads there which would push it even further over budget. Similarly, if a single Deployment is predicted to cost you, say, ninety percent of that Namespace’s budget, it’s probably not something you want to allow. Combined with Kyverno, these Kubecost features let you pair reactive controls with proactive controls and deliver highly effective savings in real time, before anything has been spent.

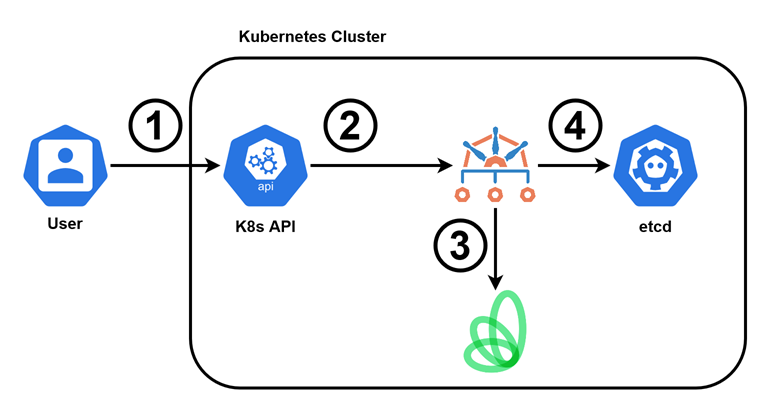

A visual depiction of this flow is shown underneath.

- A user (or process) submits the request to create a new Deployment to the Kubernetes API server.

- The API server forwards the request on to Kyverno.

- Kyverno requests cost prediction and budget information from Kubecost based on the Deployment and its Namespace.

- If the budget will not be overrun, Kyverno allows the resource to be created in the cluster; otherwise, the request is denied.

Let’s see how this is implemented in Kyverno in the below policy. It’s a lot to take in but comments have been added to hopefully make this a bit easier to digest.

What we want to say here is, “for Namespaces with a Kubecost budget, only allow new Deployments if they will not overrun the remainder of that budget.”

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: kubecost-governance
spec:
  validationFailureAction: Enforce
  rules:
  - name: enforce-monthly-namespace-budget
    match:
      any:
      - resources:
          kinds:
          - Deployment
          operations:
          - CREATE
    # First, check if this Namespace is subject to a budget.
    # If it is not, always allow the Deployment.
    preconditions:
      all:
      - key: "{{ budget }}"
        operator: NotEquals
        value: nobudget
    context:
    # Get the budget of the destination Namespace. Select the first budget returned which matches the Namespace.
    # If no budget is found, set budget to "nobudget".
    - name: budget
      apiCall:
        method: GET
        service:
          url: http://kubecost-cost-analyzer.kubecost:9090/model/budgets
        jmesPath: "data[?values.namespace[?contains(@,'{{ request.namespace }}')]] | [0] || 'nobudget'"
    # Call the prediction API and pass it the Deployment from the admission request. Extract the totalMonthlyRate.
    - name: predictedMonthlyCost
      apiCall:
        method: POST
        data:
        - key: apiVersion
          value: "{{ request.object.apiVersion }}"
        - key: kind
          value: "{{ request.object.kind }}"
        - key: spec
          value: "{{ request.object.spec }}"
        service:
          url: http://kubecost-cost-analyzer.kubecost:9090/model/prediction/speccost?clusterID=cluster-one&defaultNamespace=default
        jmesPath: "[0].costChange.totalMonthlyRate"
    # Calculate the budget that remains from the window by subtracting the currentSpend from the spendLimit.
    - name: remainingBudget
      variable:
        jmesPath: subtract(budget.spendLimit,budget.currentSpend)
    validate:
      message: >-
        This Deployment, which costs ${{ round(predictedMonthlyCost, `2`) }} to run for a month,
        will overrun the remaining budget of ${{ round(remainingBudget,`2`) }}. Please seek approval or request
        a Policy Exception.
      # Deny the request if the predictedMonthlyCost is greater than the remainingBudget.
      deny:
        conditions:
          all:
          - key: "{{ predictedMonthlyCost }}"
            operator: GreaterThan
            value: "{{ remainingBudget }}"

Kyverno will do the following:

- Listen for the creation of any Deployment in the cluster.

- Ask Kubecost for a list of budgets.

- If a budget is found for the corresponding Namespace, continue; otherwise, allow the request unconditionally.

- Ask Kubecost to predict the monthly cost of the Deployment.

- Find out what the remaining amount is in that budget.

- Compare the predicted monthly cost of the Deployment to the remaining budget. If the predicted cost is greater, deny the request.
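The steps above can be sketched in Python to show the decision in one place. This is an illustration rather than how Kyverno evaluates the policy: no HTTP calls are made, the budgets response is a hand-written dictionary shaped after the fields the policy reads (`data`, `values.namespace`, `spendLimit`, `currentSpend`), and the function names are hypothetical.

```python
NO_BUDGET = "nobudget"  # same sentinel value the policy falls back to


def find_budget(budgets_response, namespace):
    """Return the first budget whose namespace list has an entry containing `namespace`."""
    for budget in budgets_response.get("data", []):
        names = budget.get("values", {}).get("namespace", [])
        # Like the JMESPath contains() on strings, this is a substring match.
        if any(namespace in name for name in names):
            return budget
    return NO_BUDGET


def evaluate(namespace, predicted_monthly_cost, budgets_response):
    """Return (allowed, message) for a proposed Deployment."""
    budget = find_budget(budgets_response, namespace)
    if budget == NO_BUDGET:
        return True, "No budget applies to this Namespace."
    remaining = budget["spendLimit"] - budget["currentSpend"]
    if predicted_monthly_cost > remaining:
        return False, (
            f"This Deployment, which costs ${predicted_monthly_cost:.2f} to run for a month, "
            f"will overrun the remaining budget of ${remaining:.2f}."
        )
    return True, "Within the remaining budget."


# Hand-written stand-in for a budgets response; not real Kubecost output.
budgets = {"data": [{"values": {"namespace": ["foo"]}, "spendLimit": 50.0, "currentSpend": 4.57}]}

allowed, message = evaluate("foo", 78.68, budgets)
print(allowed, message)
```

One consequence of the substring match is that a budget for a Namespace named foo would also be selected for a Namespace named foobar, which is worth keeping in mind when naming Namespaces.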

Notice here how the Kyverno policies are getting incrementally more complex. Kyverno is a very powerful system which allows great flexibility and control over your policy desires. One such powerful capability on display here is the ability to call out to any system that can speak JSON over REST, such as Kubecost’s API.

Let’s now see this in action end-to-end.

Suppose we had a developer who wanted to create a Deployment which looked like the below in a Namespace called foo. The foo Namespace happens to have a Kubecost budget created for it (which the developer may not even know).

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        resources:
          requests:
            cpu: 800m
            memory: 400Mi

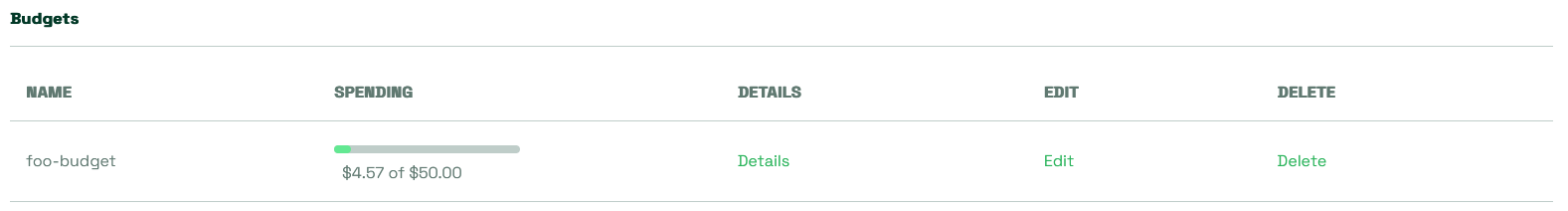

You, as the Kubecost administrator, have defined a monthly budget of $50 for this Namespace and you’re currently $4.57 into it for the month.

Let’s see what happens if we try to create this Deployment in the foo Namespace.

$ kubectl -n foo create -f deploy.yaml
Error from server: error when creating "deploy.yaml": admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/foo/nginx was blocked due to the following policies

kubecost-governance:
  enforce-monthly-namespace-budget: This Deployment, which costs $78.68 to run for
    a month, will overrun the remaining budget of $45.43. Please seek approval or request
    a Policy Exception.

We can see in the returned message that Kyverno asked Kubecost whether there was a budget for the Namespace. There was, so Kyverno then asked Kubecost to predict the cost of the Deployment. That prediction exceeded the remainder of the budget, so Kyverno blocked its creation.

You might have noticed the last part of the message referring to a “Policy Exception”. Kyverno has another ability in which users may request exemption from a particular policy such as this in a way which is Kubernetes native. For more information, see Policy Exceptions in the official documentation.

Let’s see how we can make this cheaper. We have basically two options: reduce the number of replicas or reduce the amount of requested resources. We’ll dial back on the number of replicas from four to three.

spec:
  replicas: 3

Try to create the Deployment again and we’re met with a similar denial but with different numbers.

$ kubectl -n foo create -f deploy.yaml
Error from server: error when creating "deploy.yaml": admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/foo/nginx was blocked due to the following policies

kubecost-governance:
  enforce-monthly-namespace-budget: This Deployment, which costs $59.01 to run for
    a month, will overrun the remaining budget of $45.43. Please seek approval or request
    a Policy Exception.

We’re heading in the right direction but it’s still too expensive. Let’s leave the replica count at three but request just a touch less CPU. How about 600 millicores (600m) rather than 800? The final Deployment now looks like this.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        resources:
          requests:
            cpu: 600m
            memory: 400Mi

Give it a shot and see.

$ kubectl -n foo create -f deploy.yaml

deployment.apps/nginx created

And, there we go! The predicted cost to run this Deployment was less than or equal to the remaining monthly budget, and so it was admitted.
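For readers who want to check the numbers, the arithmetic behind the two denials can be reproduced in a few lines of Python. The figures are the ones from this walkthrough; the observation that the prediction scaled with the replica count is simply read off these two numbers, not a documented guarantee, and the predicted cost of the final Deployment is not shown in the output so it is not included.

```python
spend_limit = 50.00    # monthly budget for the foo Namespace
current_spend = 4.57   # spent so far this month
remaining = round(spend_limit - current_spend, 2)

four_replicas = 78.68   # predicted monthly cost of the first attempt
three_replicas = 59.01  # predicted monthly cost of the second attempt

# In this example, three quarters of the replicas gave three quarters of the cost.
scaled = round(four_replicas * 3 / 4, 2)

for label, cost in [("4 replicas", four_replicas), ("3 replicas", three_replicas)]:
    verdict = "denied" if cost > remaining else "allowed"
    print(f"{label}: ${cost:.2f} vs ${remaining:.2f} remaining -> {verdict}")
```

Both attempts exceed the remaining amount, which is why the final attempt also had to reduce the CPU request before it could be admitted.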

Note that if you’re attempting this on your end with a fresh installation of Kubecost, you may need to wait up to 48 hours for Kubecost to have gathered enough data to provide cost predictions.

By the way, remember that PolicyReport from the labels use case? You could set this policy for proactive budget controls to Audit rather than Enforce if you are only (or initially) interested in just providing visibility. The corresponding PolicyReport will then show the costs and budgetary numbers at the time the “bad” Deployment was created.

results:
- message: This Deployment, which costs $98.35 to run for a month, will overrun the
    remaining budget of $97.64. Please seek approval or request a Policy Exception.
  policy: kubecost-governance
  result: fail
  rule: enforce-monthly-namespace-budget
  scored: true
  source: kyverno
  timestamp:
    nanos: 0
    seconds: 1702657110

Hopefully you can see how incredibly useful the combination of predictions and budgets in Kubecost can be, and how it is truly leveled up when adding Kyverno to ensure proactive cost control is maintained. The ability for admission controllers like Kyverno to request cost predictions is new in Kubecost 1.108, along with budgets based on labels, so if this piqued your interest you’ll want to get on this latest release.

Closing

Having complete control on what you spend requires not only good reactive monitoring and visibility, but going a step further by proactively preventing cost overruns before they occur. By leveraging these two best-in-class tools, Kubecost for cost visibility and monitoring, and Kyverno for policy-as-code, you can really begin to realize some massive savings by placing your organization’s financial guardrails around your Kubernetes environments.

For these and other Kyverno policies which can help save you time and money, check out the extensive Kyverno policy library here which has other Kubecost policies, including some not covered, ready to go.

About

Chip Zoller is a solutions architect at Kubecost where he focuses on finding and building ecosystem integrations which show the full power of Kubecost. He is also a maintainer of the open source Kyverno project. Chip has presented at multiple KubeCon and other conferences on a wide array of cloud native technologies, including Kyverno. He lives in Kentucky with his wife and two rescue dogs.
